There is no denying that better click-through rates (CTRs) in emails lead to more website visitors. But to maximize CTRs, a marketer first needs to maximize open rates. Add the complexities of modern-day emails to the mix, and even veteran marketers can feel overwhelmed.

How do you then arrive at the optimal version of your emails? The answer lies in A/B testing, which helps you reap better results from your marketing efforts.

In this guide, we discuss the what, why, and how of improving your email marketing campaigns with the help of A/B testing.

Table of Contents:

- What is A/B testing of Emails?

- Why should you A/B test emails?

- Key statistics you should know

- What are the key components of A/B testing in Emails?

- Which variables can you test in your email campaigns?

- Which variables should you NOT A/B test in your email campaigns?

- Factors to keep in mind while carrying out A/B Testing

- Important terminologies to know

- What are the different methods to A/B test emails?

- Key steps or practices to A/B testing email campaigns

- How to determine the sample size?

- How many days should you run your A/B tests?

- What role does the testing platform play?

- So, what’s next?

What is A/B Testing of Emails?

To put it simply, A/B testing or A/B Split testing is the process of sending out multiple versions of your emails with different variables to test their performance. This gives you more control over your email marketing campaigns and helps you see which version of the email performs the best.

The idea is to send one version of the email to one subset of your subscribers and another version to another subset. Then, you measure the performance (read rates, click-through rates) of both versions to see which one performs better. You then roll out the winner across all your other subscribers.

In other words, A/B testing of emails shows marketers how to evaluate, compare, and decide between multiple versions of the same email.
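The split described above can be sketched in a few lines of Python. This is only an illustration, not a feature of any particular email platform: the function name `split_for_ab_test`, the 20% test fraction, and the example addresses are all assumptions made for the sketch.

```python
import random


def split_for_ab_test(subscribers, test_fraction=0.2, seed=42):
    """Randomly split subscribers into group A, group B, and a remainder.

    test_fraction is the share of the whole list used for the test
    (half of it goes to each version). The default of 0.2 is an assumption.
    """
    shuffled = list(subscribers)
    # A seeded generator keeps the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    group_size = int(len(shuffled) * test_fraction / 2)
    group_a = shuffled[:group_size]
    group_b = shuffled[group_size:2 * group_size]
    # The remainder receives the winning version once the test is over.
    remainder = shuffled[2 * group_size:]
    return group_a, group_b, remainder


# Hypothetical subscriber list, for illustration only.
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b, remainder = split_for_ab_test(subscribers)
print(len(group_a), len(group_b), len(remainder))
```

Shuffling before slicing matters: if the list is sorted by signup date or by domain, taking the first block as group A would bias the comparison.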

Why should you A/B Test Emails?

Email A/B testing uses statistical methods to prove which version of an email brings you the best results. As such, it allows marketers to test various variables in their emails and then see actual results before rolling out improvements across all their subscribers.

This naturally brings forth several benefits that boost your campaigns as well as improve your overall marketing efforts:

- Better email campaign metrics.

- Enhanced list-building efforts.

- Improved results from your campaigns with little to no extra effort on your part.

- Improved email click-through rates (CTRs).

- Helps you build better relationships with your subscribers.

- Opportunity to test various variables before rolling out changes across your entire subscriber base.

- Helps you understand which subject line has the best open rates.

- Increased email deliverability rates.

- Help gather invaluable customer data for future campaigns.

- Help identify key influencers in your subscriber base and use this information to send exclusive offers only to them.

- Identifies the best-performing CTA for current and future campaigns.

- Picks the most engaging image and/or video in the email.

- Helps you understand which preheader text is leading to the best open rate.

- And so on!

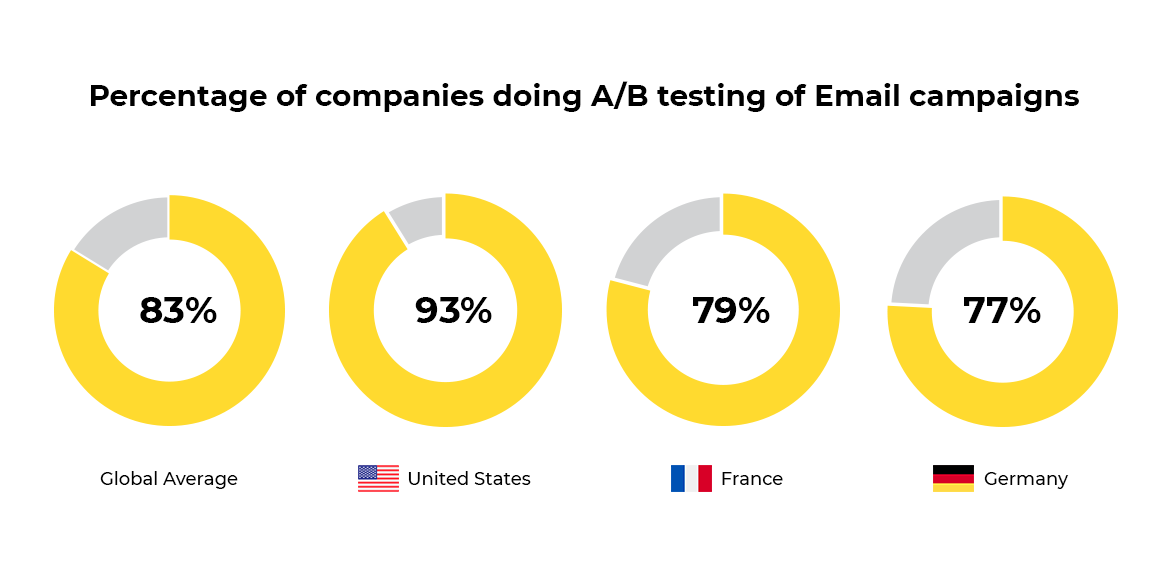

Key statistics about A/B testing of emails that you should know

Let’s take a look at some key statistics that depict the importance of A/B testing in emails. These have been curated and sourced from 99firms:

- 89% of companies in the US A/B test their email marketing campaigns.

- A whopping 40% of global companies test the subject lines of emails.

- Clear subject lines get more engagement and lead to 541% more responses than fluffy ones.

- 37% of marketers test email content, 36% test the date and time of sending, and 23% test email preheaders.

What are the key components of A/B Testing?

A/B testing requires marketers to run campaigns with multiple variants. Each of these variants (versions) should be tested against the others – and this is where the science comes in.

By running A/B tests across all possible combinations of your email variations, you are likely to come up with an optimal version that would convert better than any other version. You can roll out the same across your entire subscriber list to establish a benchmark for future email campaigns.

With that being said, here are the key components that you should consider when carrying out A/B Testing in Emails:

- Call-to-Action – What CTA would work best at engaging your audience? After all, this is where your research efforts will pay off.

- Subject Line – Which subject line would drive the subscribers to open your email? When testing a new email, start with its subject line. You can then test other variables in subsequent tests.

- Images and Videos – Which image or video would bring you the best engagement rate? Remember, it is advisable to split test between two different images in a single email.

- Pre-header – Which pre-header would engage your subscribers the most? This is a good way to improve CTR from the inbox.

- Inline images and Videos – Inline videos or images can help boost engagement rates in emails. Test between multiple images and/or inline video formats to find out what works best for your audience.

- Text – Subject line and body content are both important factors in helping you increase click-through rates. Make sure to split test between two different email texts before rolling out your preferred version across all subscribers.

- Landing Pages – Landing page testing has a direct impact on your email campaign’s conversion rate. A/B test the landing page that is linked to your email to increase conversions for the campaign.

- Delivery Timing – This is crucial to understand the best time of the day (or month) for sending email campaigns. To establish a benchmark, send an A/B test campaign for each hour of the day, starting at 10 am and ending at 11 pm.

- Retargeting Emails – Retargeted emails have higher CTRs because they are sent only to those users who have shown an interest in your product or service. You can improve open rates by A/B testing various retargeting approaches – from the image to its text and CTA, etc.

In short, A/B testing provides measurable insights into what is working and what isn’t in your emails. It can help you identify which subject line has a higher open rate or which image would leave a greater impact on subscribers.

Which variables can you A/B test in your email campaigns?

Email marketers have a lot of flexibility when it comes to testing different variables. In fact, any factor that might affect the success of an email campaign is open for testing:

- HTML Emails – Test between different content types such as text, H1-H6 tags, images, videos, links.

- Subject Line Length – Test between short and long subject lines to see which performs better.

- Personalization and Segmentation – Use the data you have on your subscribers to personalize your email campaigns and deliver better results.

- Clickable Links – Split test between multiple types of clickable links such as plain text, image buttons, and so on.

- Text vs Visual Content – Instead of using a simple text email for your campaigns, throw in an image or even a video. You can split-test between different forms of visual content to find out what works best for your audience.

- Preview Text – Test preview texts in your emails to understand which version would work better for you.

- Above the Fold vs Below the Fold – A/B test which version would perform better by including key email components first above the fold and then below the fold.

Which variables should you NOT A/B test in your email campaigns?

While you can split test most variables related to an email campaign, there are some that cannot or should not be tested:

- Campaign Name – You should not try A/B testing the campaign name of an email. While it’s important to identify and address the target audience of your campaigns, changing its name would confuse subscribers and result in a drop in engagement rates.

- Campaign URL – The same goes for the campaign URL as well as the landing page URL to which the CTA in your email navigates. Both need to be consistent so that users know where they are headed when they click on an email link. Keeping the landing page URL different from the campaign URL may confuse visitors, especially if they want to return to the page later.

- Marketing Automation Software (MAS) – This cannot be tested via an A/B test since it depends on the use case. For instance, an MAS could include several triggers based on customer behavior. So, you cannot change their order or remove some without affecting the entire automation process.

A/B testing is an excellent way for marketers to establish what works best for their audience and weed out ineffective email components. However, even though A/B tests can help determine which version performs better, it’s not always easy to identify the reason behind a higher CTR. That’s why it is crucial for marketers to establish an optimization approach that would allow them to improve their email campaigns systematically.

What are some factors to keep in mind while carrying out A/B Testing in Emails?

The more variables you test, the longer it will take to carry out your tests. For instance, if you were testing 20 different email variables, each with two alternative versions (2 x 20), each test may take 1-5 days to complete. And with each passing day, the relevance of your email’s subject line diminishes. This is why you should test just two or three variables at a time so that your tests are completed in one or two days.

A/B testing requires you to send out different versions of the same email at the same time! This would mean that your A/B test will not run in isolation and can impact your deliverability rate. If you wish to run an A/B test without affecting your deliverability, you can send it to a separate email sending list.

What are some of the benefits of A/B Testing in Emails?

A/B testing is extremely useful when it comes to improving email click rates, open rates, and conversion rates. Much like scientific experiments where one formulates a hypothesis and tests it against another version of itself, marketers can test each variable of an email campaign to find out which one performed better. A/B testing can also be very beneficial in improving content engagement rates by understanding the preferences of your audience.

It can improve overall marketing productivity because you won’t have to wonder if any single component of your email is responsible for low click-through rates or high unsubscribe rates.

Important Terminologies to Know Before You A/B Test Emails

Key email A/B testing terms include:

- Open rate – This is an engagement metric that calculates the percentage of users who opened your email after receiving it.

- Click-through rate – This measures the percentage of users who clicked on a link in an email after receiving it.

- Unsubscribe rate – The percentage of subscribers who clicked on the “unsubscribe” button to opt out.

- Conversion rate – This calculates the percentage of email recipients who performed an action you would like them to take.

- Population – The number of email recipients in your list who typically get emails delivered to them. Note that this is not the number of people you send the emails to since it excludes undelivered email recipients.

- Deliverability rate – The percentage of emails sent out that were successfully delivered to inboxes, not including those marked as spam or blocked by ISPs.

- Statistics-based approach – An approach where marketers test different variables in isolation and don’t run the tests simultaneously to avoid having a negative impact on deliverability.

- Multivariate testing approach – An approach where marketers test multiple variables at the same time in order to identify the core reasons for higher click-through rates or better open rates. This would allow them to improve their email campaigns systematically rather than just focusing on one variable at a time.

- Sample – A representative group of users who are similar to your target audience that would be used for testing emails.

- Test set-up – This includes defining the variables that would be tested for each email, carrying out tests with a representative sample, and identifying the winner of each test.

- Test timeline – This is how long your tests would take to complete because it includes the duration that your email will be out in the mail and the number of days required for testing and evaluation.

- Hypothesis – A statement that you’d like to validate by performing an experiment, such as “An image in the carousel is more engaging than an image without it.”

- Data/metric – A data point that shows how your tests are progressing, such as click-through rates or open rates.

- Confidence Interval – A range of values that is likely to contain the true population value, such as the true average open rate.

- Confidence Level – How confident you can be that the confidence interval contains the true value, usually expressed as a percentage such as 95%. The higher the confidence level you demand, the wider the interval becomes (more standard deviations on either side of the sample mean).
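To make the rate metrics and the confidence interval above more concrete, here is a minimal Python sketch. The campaign counts are made up for illustration, the helper names `rate` and `confidence_interval` are assumptions, and the rates use delivered emails as the denominator, which is one common convention and not the only one. The interval uses the simple normal-approximation formula, which is a textbook choice and may differ from what your email platform reports.

```python
import math


def rate(count, total):
    """Return count/total as a percentage, or 0.0 when total is zero."""
    return 100.0 * count / total if total else 0.0


def confidence_interval(successes, total, z=1.96):
    """Normal-approximation interval for a proportion.

    z=1.96 corresponds to a 95% confidence level.
    """
    p = successes / total
    margin = z * math.sqrt(p * (1 - p) / total)
    return max(0.0, p - margin), min(1.0, p + margin)


# Hypothetical campaign counts, for illustration only.
sent, delivered, opened, clicked, unsubscribed = 1000, 980, 245, 49, 5

print(f"Deliverability rate: {rate(delivered, sent):.1f}%")
print(f"Open rate: {rate(opened, delivered):.1f}%")
print(f"Click-through rate: {rate(clicked, delivered):.1f}%")
print(f"Unsubscribe rate: {rate(unsubscribed, delivered):.1f}%")

low, high = confidence_interval(opened, delivered)
print(f"95% CI for open rate: {100 * low:.1f}% to {100 * high:.1f}%")
```

A narrow interval suggests the measured rate is a stable estimate. A wide one suggests the sample is too small to say much.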

What are the different methods to A/B Test Emails?

For best results, it is always advisable that your email marketing solution provides the functionality to A/B test emails. If it doesn’t, here are some methods through which you can A/B test your email by segmenting your email lists:

- Method 1 – Create two versions of the same email and send them out to your audience. This method helps you test your subject lines, email body, and your calls-to-action (CTAs).

- Method 2 – Test two different email components against each other. This method helps you test design elements such as the use of different images, button colors, etc., in a single email template. Such tests will allow you to know which CTA is more likely to lead to higher conversion rates.

- Method 3 – Test your email campaigns by sending them to different segments of your audience. This method helps you test two variations of the same email against each other, but this time within a single list using parameters like gender, age groups, etc.

Key Steps or Practices to A/B Testing Email Campaigns

To the uninitiated, setting up a brand new email campaign for A/B testing can be a daunting task. Take note of the following best practices and you should steer clear of any major mistakes:

1. Begin with a Hypothesis: You must always begin by formulating a hypothesis and then presenting it as a simple statement or question.

For instance, if you wanted to test the impact of an email’s content on its conversion rate, your hypothesis may sound something like this: “An email with actionable tips around our product will have higher conversion rates than emails without”.

Define the elements that would need to be improved or edited in order to test this hypothesis. Accordingly, you can determine if the changes are contributing positively or negatively to your desired outcome and then select the best-performing version of the email.

2. Select the variable that you want to test: Your next step should be to pick the variable that you want to test. The best way to do this is to pinpoint the variable that is most likely to affect your email engagement and conversion rates.

This could be your subject line, email body, or even a particular call-to-action. Some examples of these can be:

- Subject Line: ‘Buy Now’ Vs. ‘Check out the products’

- Email Body: Short body text Vs. Long body text

- Testimonials: ‘Testimonial X’ Vs. ‘Testimonial Y’ (or no testimonial at all)

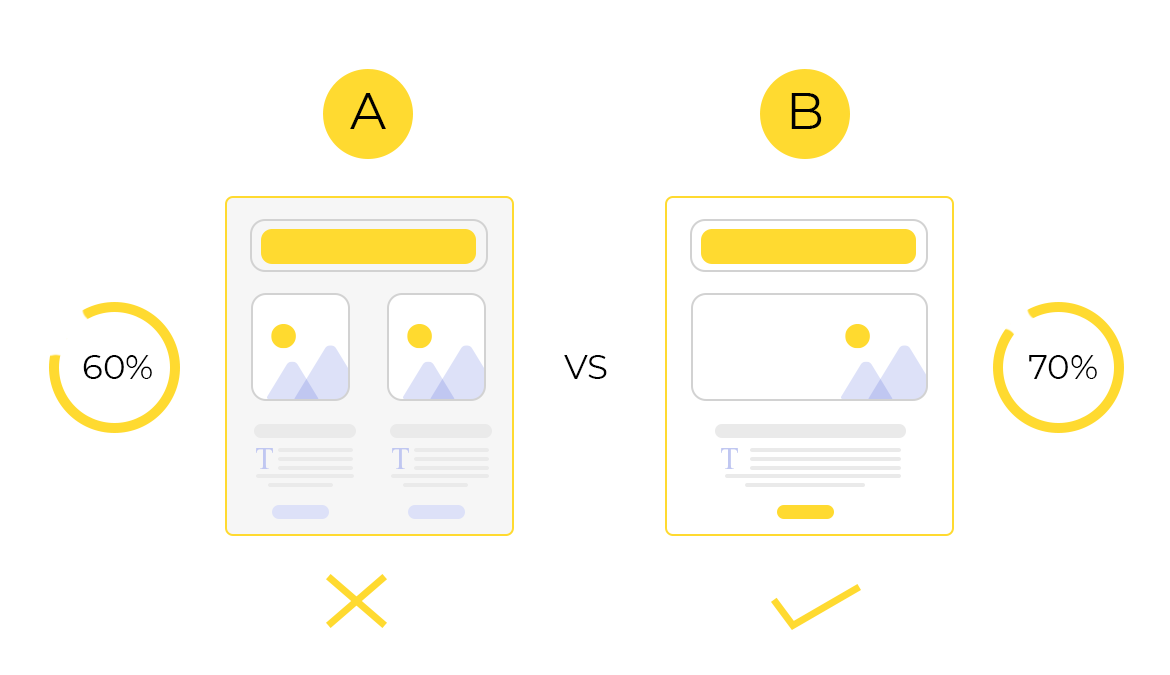

- Message Layout: ‘Single column’ Vs. ‘Double column’

- Variable Location: ‘Top placement’ Vs. ‘Bottom placement’

- Personalization: ‘First name’ Vs. ‘Mr. First name, Last name’

- Specific Offers: ‘Discount of 40%’ Vs. ‘No Shipping Charges!’

3. Test one variable at a time and keep logs: As we mentioned before, A/B testing is about gathering statistics and then making inferences based on that data. To get accurate results, it’s important to test one variable at a time and keep logs of the variables you’re testing against each other. For instance, if you were comparing two different images used in an email to determine its conversion rate, note the number of clicks, forwards, unsubscribes, etc. for each image separately.

The best way to keep track of this data is through spreadsheets. They allow you to create charts and graphs that will help you analyze your collected data better.

4. Don’t compare two different variables: You must not, under any circumstances, try and compare the results of two completely different variables. For instance, you must never try to compare data that has been obtained through personalizing an email with messages that are sent out without any personalization at all.

This is because it isn’t just one variable that is responsible for your email engagement. There are several elements that go into making your email template, which you must take into account if you want to make accurate A/B testing marketing inferences.

5. Know what you’re looking for: With A/B testing, before running the test, it’s important to always know exactly what you are expecting. This is because it is sometimes difficult to determine the value of variables after you’ve completed your entire testing cycle. For example, just because an email with a particular subject line had more clicks than one with any other subject line doesn’t mean that it will work better for your business goals. It may have simply gone with the trend or received more attention than the other emails simply because it was the newest in the lot.

6. Use a single code for all your variations: While you’re testing, ensure that everything is using a single code base and that only one variable is being tested at a given time.

Making changes to your email templates while you’re still running tests can actually skew your results and cause you to lose valuable information. If this has already happened, ensure that you recreate your test and start fresh.

7. Determine the sample size: In order to execute an effective A/B test for your email marketing campaign, it is important to determine the ideal sample size.

Marketers often create 2 groups with 50% of subscribers in each (when testing 2 email versions). But for better engagement results, it is advisable that you create two email groups each with 10-15% of your subscribers (in case you have 1000+ entries in your email list). You can then roll out the best-performing email to the remaining subscriber base for maximum bang for the buck (read ROI).

8. Determine the duration: What should be the adequate time window to conduct an A/B test? It is important to answer this since the accuracy of your test results often depends on the timeframe of the test.

For instance, consider that you send 2 versions of an email (version X and Y) with varying headlines to 1000 subscribers each. After 6 hours, the open rate is 0.5% for X and 0.6% for Y. But if you wait a bit longer, the picture may change, with version X emerging as the actual winner with an open rate of 0.8%. 24-48 hours seems to be a comfortable sweet spot for most email campaigns (provided that you have sent the emails at the most engaging date and time for your target audience).

9. Create different variations: The next step should include creating different variations of the same email design/layout where you can insert different versions of the variable that you are testing.

Remember, your goal here is to find out which version works best for your audience. In case you are tweaking the email design, it is important that you keep the main layout or design of your email intact. Also, it is best not to make more than 2-3 significant changes to your original template.

10. Get Your Numbers Right: Just confirming that your email is opening or being clicked upon does not indicate a successful test. You must work out the numbers right, i.e., you will have to measure each variable against a singular metric which either goes up or down (depending on how it performs) and directly affects the ROI.

For the example in point 8, the conversion rate would be the metric to watch for as your desired result.

11. Keep track of results: A good testing platform should provide a dashboard where marketers can monitor the daily progress of their email campaigns and see which version of the email is leading the way. Keep a close watch on these results since they can help you better plan your email campaign calendar in the future.

Note: It is always best to test your email campaigns at regular intervals so that you can ensure higher engagement and conversion rates. A/B testing your email campaigns against your original template will also help you know whether changes in the design and layout of your emails are required or not. Implement these tips and track the progress of your next A/B test for better conversion rates.

12. Get Feedback From Your Target Audience: Once you have your results, don’t be too quick to decide on the winner. Remember that you are testing two (or more) variables against each other and it is entirely possible that both emails performed equally well or poorly depending on what you were trying to test. To get an unbiased perspective, share your results with a few unbiased individuals and get their feedback on which email performed better.

The Bottom Line: A/B testing is an integral part of email marketing campaigns because it’s based on the key principle of statistical inference. This tests one variable at a time and then makes inferences based on that data. However, before you can get started with A/B testing, you must first have a clear understanding of all the elements that go into your email template and why they must be tested together. By keeping track of data and identifying results accurately, you will be able to make more accurate inferences in the end.

A/B testing is a fantastic way to enhance your email marketing campaigns and ensure better audience engagement. While it may sound intimidating at first, if you follow the best practices mentioned above, you will be able to conduct a successful test in no time!
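One way to avoid declaring a winner too early is to check whether the difference between two versions is statistically significant. The sketch below uses a standard two-proportion z-test, which this guide does not prescribe. It is shown here only as a common textbook approach, and the function name and the 0.05 threshold are assumptions. The counts are taken from the duration example in step 8 (1000 recipients per version, open rates of 0.5% and 0.6% after 6 hours).

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = success_a / total_a
    rate_b = success_b / total_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Step 8 example: 5 opens out of 1000 for X, 6 opens out of 1000 for Y.
z, p_value = two_proportion_z_test(5, 1000, 6, 1000)
significant = p_value < 0.05  # 0.05 is a conventional threshold, assumed here
print(f"z = {z:.2f}, p = {p_value:.2f}, significant: {significant}")
```

With so few opens per version, the test does not flag the gap between X and Y as significant, which fits the advice to wait longer before picking a winner. Note that the function divides by zero if neither version has any opens, so a real script would need to guard against that case.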

How to Determine the sample size for an A/B Test of Emails?

In this section, let’s understand how to actually calculate the sample size and timing of your email A/B tests. Some prerequisites or assumptions here include that the emails can only be sent to a finite set of audiences. And that you will be taking a small chunk of your entire email list to statistically deduce the best performing version of the email.

Here’s the best way to calculate your sample size:

- Calculate whether you have enough contacts in the email list to implement A/B testing. The minimum recommended number of contacts for successful A/B testing is usually 1000. In case you are unable to meet this threshold, it is best to segment your entire list into 2 equal sets and then determine the best performing email from a list of 2 email versions. The result of the tests can then be used for similar future campaigns.

- Calculate the time it will take in hours or days for each campaign to yield a statistical significance in results. The A/B test can be considered complete when your sample size suffices and when there is enough data collected from all the tests. Based on this, you can work backward to select the ideal sample size for the most accurate test results.

- Make an assumption about how many participants will choose, or convert, on your email offers in order to find the sample size corresponding to that level of conversion. For example, if you are planning on converting 20% of your contacts list (1000 contacts) through an email offer, then use an estimate of 200 for your sample size. If you are expecting a 10% response rate, choose 100 contacts per email version (from a pool of 2 email versions) as the required sample size.
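As a cross-check on the rule of thumb above, the following sketch estimates the contacts needed per email version with the standard sample-size formula for comparing two proportions. This formula is a general statistical technique and is not the method described in this guide. The function name, the 5% significance level, and the 80% power are assumptions, and the 10% and 20% rates are reused from the example above purely as a hypothetical baseline and target.

```python
import math
from statistics import NormalDist


def sample_size_per_version(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Estimate contacts needed per version to detect the given rate change.

    alpha is the two-sided significance level and power is the chance of
    detecting a real difference. Both defaults are conventional assumptions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    mean_rate = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * mean_rate * (1 - mean_rate))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Hypothetical scenario: a 10% baseline rate and a hoped-for 20% rate.
n_large_lift = sample_size_per_version(0.10, 0.20)
# A smaller expected improvement needs many more contacts per version.
n_small_lift = sample_size_per_version(0.10, 0.12)
print(n_large_lift, n_small_lift)
```

The main takeaway is that the required sample grows quickly as the expected difference between versions shrinks, so subtle changes need much larger test groups than dramatic ones.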

How Many Days Should You Run Your A/B Tests?

The number of days required for an A/B test will depend on the number of contacts in your email list. For example, let’s assume that you have 2000 email contacts and want to run 40 tests that can be carried out over 20 days. By running 4 tests per day, you can get statistically significant results by the 10th day.

Had the number of contacts been 4000, you would have got statistically significant results by the 5th day.

What role does the testing platform play in A/B Testing Email Marketing?

A good email marketing platform can help you run an A/B test on variables that usually affect open rates, click-through rates, conversion rates, bounce rates, and many other metrics. It can also help you in tracking and analyzing your test results without any coding or design knowledge – all that you need is a few clicks to get the job done! This way, email marketers can run tests while improving their overall productivity and experience.

If you have not yet decided which email A/B testing platform to go for, here are some leading options that you can opt for today:

- Klaviyo: This email A/B testing and personalization platform makes it easier for companies to turn more website visitors into loyal customers. It analyzes user behavior and helps businesses send highly targeted emails that generate higher revenue.

- Mailchimp: This is one of the most popular email service providers in the industry. It is powerful yet easy to use, and comes loaded with features that can help you conduct A/B testing on your email campaigns.

- HubSpot: This email marketing platform allows marketers to design and send beautiful emails while tracking the full customer journey. It also optimizes each campaign for maximum impact without any complex code or technical knowledge.

- Totango: This platform can help businesses identify their most active users, recognize potential sales opportunities, and increase engagement with personalized product recommendations. It also helps marketers in optimizing email distribution schedules to maximize revenue.

- Retention Science: Powered by machine learning technology, this platform enables marketers to personalize the customer experience by detecting online actions that matter most to each segment.

- Litmus: This email testing platform helps marketers take their emails from plain text to beautiful HTML in a few simple clicks. It also ensures that marketers run A/B tests without any technical or design resources.

So, what’s next?

So, there you have it! Now you know the basics of A/B split testing for your email marketing campaigns. You can start getting a few results right away. Just remember that the more relevant variables you test, the easier it will be for your results to become statistically significant. This way, you can achieve the best results from your tests.

If you’re still struggling with getting started in A/B testing or if you’re an experienced marketer and simply want to accelerate your performance with A/B testing, we at Email Uplers can help you with all your email marketing endeavors. We have a team of email marketing experts who can help you achieve your email goals. With us, you don’t have to worry about wasting resources on sending unsuccessful emails anymore.

Kevin George
